AI

Upside Down: Is the UK's Tech Future Being Sold Off?

Kaj Siebert • On the surface, the recent wave of US tech investment in the UK, headlined by a £31 billion "Tech Prosperity Deal," looks like a resounding vote of confidence. Announcements from giants like Microsoft, Nvidia, and Google promise to build the very fo…

AI

Carrying Your Own MIMIR – Knowledge That Meets You Where You Are

Kaj Siebert • At the intersection of myth and modern technology lies MIMIR, a whimsical project I've been developing, inspired by the Norse figure renowned for his boundless wisdom. In legend, Odin carried Mímir’s severed head to receive secret counsel; in our ag…

AI

AI as a Virtual Colleague: Embracing Neurodiversity and Navigating the Risks of 'Shadow Intelligence'

Kaj Siebert • In the last couple of years I've found myself changing how I use AI, from what started as something of a novelty replacement for Google to now treating it as a virtual colleague. Instead of simply using it to answer questions and look up (make up?) fact…

AI

AI and Creativity: Inspiration, Reuse, and the Value of a Copy

Kaj Siebert • Inspiration rarely arrives in a vacuum. Whether it's a young musician riffing on a jazz standard, a painter reinterpreting a classical motif, or a coder learning from open-source projects, creativity often begins with reuse. The act of drawing fro…

AI

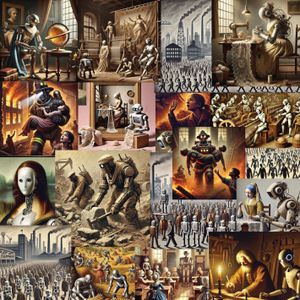

Labour Reimagined: From Hand to Automation

Kaj Siebert • In recent years, AI has become a familiar tool in many workplaces, helping with tasks from drafting emails to writing code. This rapid progress raises an intriguing question for many of us: "Could AI eventually become better at our jobs than we are…