Who Gets to Use the Grid?

Earlier this week I found myself in a seminar room at the UCL Knowledge Lab, arguing - very productively - about power, epistemology, and whether anyone in the room could agree on what “federated” actually means. The occasion was a workshop run by Allison Littlejohn, Eileen Kennedy and the Inclusive Futures project team, part of a longer effort to shape the future of the UK’s National Federated Computing and Storage (NFCS) infrastructure. Which is a mouthful, I know, but stay with me.

Why I Cared Enough to Show Up

My excuse for being in the room was that I inhabit many different worlds at once. Over the years I’ve worked closely with teams in the children’s social care sector - including Data to Insight, a national programme helping children’s services across England use data more effectively. My other hat involves science outreach at European and international music festivals with CERN and The Big Bang Collective. Neither of these sounds like it has an obvious stake in research computing infrastructure. Both of them do.

The social sector angle. The kind of work Data to Insight does doesn’t require the computing power that keeps astrophysicists up at night. They’re not simulating galaxy formation. What they need is something that’s arguably harder to build: infrastructure that’s hyperlocal - close enough to the data to satisfy strict privacy requirements - but that also scales nationally and speaks a common enough language across 150+ local authorities, each with their own systems, their own cultures, and their own definitions of even fairly basic terms.

What struck me years ago, crossing from a background in astrophysics into the social sector, is that the tools are almost identical. The languages are completely different. An analyst in a London borough trying to understand outcomes for looked-after children and a researcher modelling stellar atmospheres in Chile are both wrestling with large, messy, incomplete datasets, trying to make robust inferences under uncertainty. They just have entirely different vocabularies, and - crucially - entirely different infrastructure either supporting or failing to support their work.

The outreach angle. Having seen what the ORBYTS programme at UCL manages to do - putting secondary school students to work on actual research problems alongside professional scientists - I’ve become convinced that national research infrastructure should think seriously about pre-university students as real users, not a bolt-on for the education team to worry about separately.

On more than one occasion I’ve talked to teenagers who genuinely love programming, but who say “what’s the point - AI will do all of that for us in the future.” They can’t see how they could ever be leading rather than following these systems. And when you’re sitting in a classroom, the road to an observatory high in the Atacama Desert feels impossibly long. But giving pupils an early taster of how the work is actually done - letting them touch the tools, contribute to real questions - starts to make that journey feel possible.

If we want to show young people that research is something they can participate in, the infrastructure needs to be something they can actually reach.

The Axes

The workshop was well structured, opening with a framing from Francisco Durán del Fierro that gave the whole day its shape: two axes along which you can map almost any research infrastructure system.

The first is power - how centralised or distributed is control? Does one body set the rules, own the resources, and grant access? Or is that authority spread across many independent actors with their own governance?

The second is epistemic culture - which sounds like jargon but means something fairly intuitive. How much does the infrastructure assume everyone works the same way? Does it serve a community with shared methods and a shared vocabulary, or does it need to accommodate very different disciplines, sectors, and ways of knowing?

I spend a lot of my professional life crossing epistemic boundaries. Moving between astrophysics, data science, and children’s social care means constantly adjusting not just vocabulary but entire mental models - and occasionally, yes, whether to wear a shirt. The observation that infrastructure itself encodes these assumptions, and that some communities therefore have to do far more translation work just to use it, felt immediately recognisable.

What Does “Federated” Actually Mean?

Honestly? The first session was largely spent establishing that none of us were quite sure. But through a series of exercises, Steve Earl and the team from the Edinburgh Futures Institute helped the group work through what federation might actually mean in practice.

Lots of related ideas kept surfacing - interoperability, shared standards, distributed resources, common governance frameworks - but exactly how they have to fit together to constitute a genuinely federated system took a while to pin down. Which was fine. Working through that kind of definitional fog in a structured way is exactly what workshops like this are for.

The two examples that anchored the discussion most usefully were ones everyone in the room already uses daily. Email is a beautifully federated system: a shared standard, completely decentralised implementation, many competing providers, and yet I can send a message from my own server to yours without either of us caring what software the other is running. eduroam - the wifi network that lets researchers connect at universities across Europe and beyond - is a more targeted but equally elegant example: federated identity, shared trust framework, local infrastructure. You walk into a university you’ve never visited and the wifi just works. That’s not magic, it’s federation done well.

Both examples gave the group something concrete to reason from, which helped enormously when things got more abstract.

The Four Quadrants

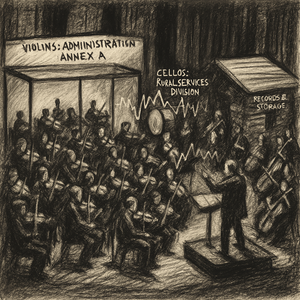

The bulk of the day was spent working through what systems look like at different combinations of those two axes. The space divides naturally into four quadrants, and the exercise was to find real anchors for each.

Centralised power, epistemically homogeneous (A): Think of a university’s institutional HPC service - one steering body, a relatively uniform user community, a shared set of expectations about how you submit a job and what a result looks like. Highly optimised for that community; less so for anyone outside it. In the software world, this is Windows with its core Microsoft applications. Direction is set and led by Microsoft - centralised power - and although the users themselves are epistemically diverse, the infrastructure forces them to converge on a common language. We all had to learn what “The Ribbon” meant, something that had no office-tool connotation to any of us until Microsoft introduced it.

Centralised power, epistemically plural (B): The ONS Secure Research Service is a good anchor here. ONS controls accreditation, safe settings, and output checking - centralised power - but the researchers using it come from government, academia, industry, and the third sector, bringing very different methods and questions. The Linux kernel model, if you like: firm central governance, with a broad ecosystem built on top.

Decentralised power, epistemically homogeneous (C): Windows-in-the-wild. Microsoft sets the platform, but the actual system - the sprawling ecosystem of WinForms applications, Access databases, and Excel macros - is built and maintained locally, by thousands of organisations each encoding their own workflows into whatever the platform can run. The shared substrate holds it together; the practice fragments at every edge. Anyone who’s ever tried to migrate a council’s “system” onto something modern will recognise this immediately. In research computing, the Worldwide LHC Computing Grid (WLCG) plays a similar role - hundreds of independently operated sites across dozens of countries, but all serving particle physics, all running the same middleware stack, all structured around the same data model. Decentralised operations, shared epistemic culture.

Decentralised power, epistemically plural (D): The JavaScript ecosystem. No stable central governance, multiple incompatible “right ways” to do almost everything, constant churn in tools and conventions. In research computing, this looks like an early-stage cross-institution collaboration held together by ad hoc scripts, a shared Dropbox folder, and a great deal of goodwill. Also, if I’m honest, a pretty good description of my office - highly decentralised (stuff everywhere) and thoroughly epistemologically pluralistic (unorganised).

None of these is inherently better or worse. Each has communities it serves well and communities it quietly marginalises. The point of the exercise was to use that map to think about who shows up in each quadrant - who thrives, who has to do the translation work, and who eventually gives up and finds a workaround.
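
For readers who think in code, the map can be written down as a small lookup. This is a minimal sketch of my own, not something the workshop produced: the 0-to-1 scores, the 0.5 cut-off, and the names `Placement` and `quadrant` are all illustrative assumptions, and the example scores are rough guesses for the anchors described above.

```python
from dataclasses import dataclass

# Quadrant labels A-D follow the post. Everything numeric here is an
# illustrative assumption, not a measurement.
QUADRANTS = {
    (False, False): "A: centralised power, epistemically homogeneous",
    (False, True): "B: centralised power, epistemically plural",
    (True, False): "C: decentralised power, epistemically homogeneous",
    (True, True): "D: decentralised power, epistemically plural",
}


@dataclass(frozen=True)
class Placement:
    """A system placed on the two axes (hypothetical 0-to-1 scores)."""
    name: str
    decentralisation: float  # 0 = one body in control, 1 = fully distributed
    plurality: float         # 0 = one shared way of working, 1 = many


def quadrant(p: Placement, cutoff: float = 0.5) -> str:
    """Return the quadrant label for a placement, split at an assumed cut-off."""
    key = (p.decentralisation >= cutoff, p.plurality >= cutoff)
    return QUADRANTS[key]


# Guessed scores for the anchors named in the text.
examples = [
    Placement("Institutional HPC service", 0.1, 0.2),
    Placement("ONS Secure Research Service", 0.2, 0.8),
    Placement("WLCG", 0.9, 0.1),
    Placement("JavaScript ecosystem", 0.9, 0.9),
]

for p in examples:
    print(f"{p.name}: {quadrant(p)}")
```

A hard cut-off is the crudest possible choice. Real systems sit somewhere on a continuum, which is why the axes are more useful as a way of asking questions than as a classifier.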

The Afternoon: Personas, Futures, and a Very Centrist Vote

The third session took a set of user personas - active users, prospective users, people who’d tried the system and disengaged - and mapped them against the four quadrant futures. Would they be encouraged or put off by more centralised infrastructure? By more standardised epistemic grammar?

Concentration was flagging a bit by that point, for most of us - not helped by the fact that we hadn’t had quite enough time in the earlier sessions to really inhabit those personas in depth. But the exercise still surfaced real tensions. Some user types were clearly more vulnerable to centralised power dynamics; others struggled most with epistemic standardisation that didn’t fit their methods.

And then came the vote on where participants wanted to see the system move. When the preferences were mapped across the axes, the average landed almost exactly in the centre.

Which, on reflection, is probably the right answer for infrastructure that genuinely needs to serve diverse communities - not a cop-out, but a recognition that the goal isn’t to optimise hard for any single quadrant but to hold multiple modes in productive tension.

A Personal Note: Why I Think This Matters Over the Next Fifteen Years

What follows is my view, not the workshop’s.

There’s a temptation to treat federated national research infrastructure as a concern for HPC specialists and policy teams - important, obviously, but not broadly relevant. I think that framing is wrong, and I want to be specific about why.

We’re living through a period of rapid concentration. The digital infrastructure that underpins research - and, increasingly, a lot of public service delivery - is consolidating into the hands of a small number of large commercial providers. That’s not a criticism of any particular company; it’s an observation about where compute, storage, and AI capacity actually lives, and who sets the terms. When the infrastructure is effectively someone else’s, so is the ability to determine pricing, data residency, acceptable use policies, and ultimately what kinds of questions are straightforward to ask.

Resilience and sovereignty, in this context, aren’t abstract geopolitical concepts. They have direct consequences for specific people doing specific work.

Consider an analyst in a local authority children’s services team trying to understand why a particular cohort of looked-after children isn’t reaching its potential - and whether an intervention is making a difference. She needs access to linked, privacy-respecting data. She needs infrastructure that operates within a legal framework she understands, that won’t restructure its pricing or change its terms next quarter, and that will still exist in a recognisable form in five years. She’s not doing big science. She’s trying to help children.

Now consider an astrophysicist trying to map the large-scale structure of the universe. She needs substantial compute, reproducible pipelines, and long-term data archiving - data that needs to be accessible and interpretable in twenty years, not just this grant cycle.

These feel like entirely different problems. The underlying need is the same: infrastructure you can rely on, that operates under governance you have some stake in, and that serves your actual work rather than requiring you to translate your work into whatever the infrastructure happens to be built for.

Building that - nationally, federally, for everyone from children’s services analysts to particle physicists - is genuinely hard. It requires exactly the kind of careful thinking about power, about whose epistemic culture gets encoded, and about who ends up doing the translation work, that the workshop was trying to do.

I found the speculative scenario approach - working across axes of power and epistemic culture, rather than around well-defined use-cases for specific personas - genuinely fresh. It’s not how I usually think about these problems, and it shifted something for me. Coming from a background where you start with a specific user and a specific need, it was useful to be forced to reason at a more structural level first.

I’m glad I was there. And I think the people in that room were working on something that will matter.

A shout-out to Jeremy Yates for introducing me to the project.