The Galton Board and the Shape of Randomness

You’ve probably seen the bell curve before. It’s the smooth, rounded shape that often appears in graphs and charts, especially when people want to show what’s “normal” or average. You might have come across it in news stories, surveys, or even weather reports, wherever people talk about how typical something is or how results tend to cluster around the middle. The curve rises gently, peaks in the centre, and falls away again, forming a pattern that many people recognise even if they don’t know its name.

You may know it from exam results, where a few students score very high or very low, but most end up somewhere in the middle. You also see it in adult height, IQ tests, measurement errors in science, and how long people take to finish a task. The reason it appears so often is that many of these results don’t come from one single cause. Instead, they come from the combined effect of lots of small influences. In the case of height, that might be things like nutrition, genetics, childhood illness, and sleep. For exam performance, it might include how much sleep a student got, how nervous they were, how much they revised, and how well the questions happened to match what they studied. No single factor decides the final result on its own, but when they all add together, the result often falls near the middle. That’s the basic pattern behind the bell curve: lots of small random effects pushing a value slightly up or slightly down, until most of the results cluster somewhere in between.

One way to think about it is this: imagine you’re adding up a lot of tiny pushes, each in a slightly different direction. Some push a little higher, some a little lower. If the pushes are random, that is, if there’s no consistent bias in one direction, then the highs and lows tend to cancel each other out.

Most of the time, you end up somewhere near the middle. Only rarely do you get enough pushes in the same direction to land far out on either side.

This assumes that all the pushes are roughly the same size. If one of them were huge and the others tiny, the pattern wouldn’t be so smooth. But when the individual effects are similar and balanced, this kind of shape tends to emerge over and over.
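To make the idea of adding up tiny pushes concrete, here is a minimal sketch. It assumes each push is drawn uniformly between -1 and +1, and the names `sum_of_pushes` and `near_middle`, the 50 pushes per total, and the fixed seed are all choices made for this illustration rather than anything from the original simulation.

```python
import random

def sum_of_pushes(num_pushes, rng):
    """Add up num_pushes small pushes, each drawn uniformly from [-1, 1]."""
    return sum(rng.uniform(-1.0, 1.0) for _ in range(num_pushes))

rng = random.Random(0)  # fixed seed (an arbitrary choice) so the run is repeatable
totals = [sum_of_pushes(50, rng) for _ in range(10_000)]

# The total could in principle land anywhere from -50 to +50,
# so a band of 5 either side of zero covers only a tenth of that range.
near_middle = sum(1 for t in totals if abs(t) <= 5) / len(totals)
print(f"share of totals within 5 of zero: {near_middle:.2f}")
```

Even though the band around zero is only a tenth of the possible range, a large majority of the totals should fall inside it, because the ups and downs mostly cancel.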

To really understand where this shape comes from, it’s helpful to look at something simpler: the coin toss.

Imagine tossing a coin ten times. Each toss can land heads or tails. If you repeat this experiment over and over, keeping track of how many heads you get each time, you’ll start to see a pattern. Most of the time, you’ll get around five heads. Sometimes you’ll get four or six. It’s less common to get only one head or all ten. If you plot how often each result comes up, you’ll start to see a small version of the bell curve.
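You can try this experiment without a real coin. The sketch below assumes a fair coin and repeats the ten-toss experiment 10,000 times; the helper name `count_heads`, the seed, and the text-bar scaling are made up for this example.

```python
import random
from collections import Counter

def count_heads(num_tosses, rng):
    """Toss a fair coin num_tosses times and return the number of heads."""
    return sum(rng.random() < 0.5 for _ in range(num_tosses))

rng = random.Random(1)  # fixed seed (arbitrary) for a repeatable run
num_experiments = 10_000
tally = Counter(count_heads(10, rng) for _ in range(num_experiments))

# Print a rough text histogram: one '#' per 50 experiments.
for heads in range(11):
    bar = "#" * (tally[heads] // 50)
    print(f"{heads:2d} heads: {tally[heads]:5d} {bar}")
```

The bars should bulge around five heads and thin out towards zero and ten, giving a small, blocky version of the bell curve.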

This is called a binomial distribution. It’s the probability pattern you get when something has just two possible outcomes, like heads or tails, and you repeat the same experiment a fixed number of times. The total number of heads depends on a series of independent, random choices. There’s no way to predict each individual result, but over many repetitions the totals settle into a clear, predictable shape.
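The exact probabilities behind that shape can be worked out by counting. For n tosses there are "n choose k" ways to get exactly k heads, and each particular sequence has probability p^k (1 - p)^(n - k). Here is a short sketch of that formula using Python's standard `math.comb`; the function name `binomial_pmf` is just a label chosen for this example.

```python
from math import comb

def binomial_pmf(k, n, p=0.5):
    """Probability of exactly k successes in n independent trials,
    each succeeding with probability p."""
    return comb(n, k) * p**k * (1 - p) ** (n - k)

# Probabilities for 0 to 10 heads in ten tosses of a fair coin.
probs = [binomial_pmf(k, 10) for k in range(11)]
for k, prob in enumerate(probs):
    print(f"P({k} heads) = {prob:.4f}")
```

For ten fair tosses, exactly five heads has probability 252/1024, a little under a quarter, while all ten heads has probability 1/1024, roughly one time in a thousand.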

Now imagine increasing the number of tosses. With one coin, you can only get heads or tails. With two coins, there are three possible results: zero, one, or two heads. With ten coins, there are eleven possible totals. With a hundred coins, there are 101. For a fair coin the distribution is symmetrical however many tosses you make, but as the number of tosses grows, its shape becomes smoother. It starts to look more and more like that familiar bell curve.
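One rough way to check this is to compare the exact binomial probabilities with a bell curve that has the same mean, np, and the same standard deviation, sqrt(np(1 - p)). The sketch below does that for a fair coin; the function names and the choice of "largest gap at any total" as the yardstick are assumptions made for illustration.

```python
from math import comb, exp, pi, sqrt

def binomial_pmf(k, n, p=0.5):
    """Probability of exactly k heads in n tosses."""
    return comb(n, k) * p**k * (1 - p) ** (n - k)

def normal_pdf(x, mean, sd):
    """Height of the bell curve with the given mean and standard deviation."""
    return exp(-0.5 * ((x - mean) / sd) ** 2) / (sd * sqrt(2 * pi))

def largest_gap(n, p=0.5):
    """Biggest difference, over all totals k, between the binomial
    probability and the matching bell curve evaluated at k."""
    mean, sd = n * p, sqrt(n * p * (1 - p))
    return max(
        abs(binomial_pmf(k, n, p) - normal_pdf(k, mean, sd))
        for k in range(n + 1)
    )

for n in (2, 10, 100, 1000):
    print(f"n = {n:4d}: largest gap = {largest_gap(n):.6f}")
```

The gap should shrink steadily as n grows, which is the numerical counterpart of the histogram looking smoother and more bell-like.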

This connection between simple binary choices and the bell curve is a classic special case of the Central Limit Theorem, which says that, under mild conditions, the sum of many independent random effects tends towards a bell curve. But instead of staying in the abstract, we can see it play out physically using something called a Galton board.

The Galton board is a simple device that shows this process in action.

It’s a vertical board with a grid of small pegs arranged in rows. You drop a ball in at the top, and as it falls, it hits a peg in each row and bounces left or right. Each bounce is a random choice, just like a coin toss. After passing through all the rows, the ball lands in one of several slots at the bottom.
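A single ball's journey can be sketched in a few lines. This is an idealised model, not a physics simulation: it assumes every bounce is an independent 50/50 choice, and the function name `drop_ball`, the 12 rows, and the seed are invented for this example.

```python
import random

def drop_ball(num_rows, rng):
    """Return the slot (0 to num_rows) where one ball lands.

    The slot index is simply the number of rightward bounces.
    """
    slot = 0
    for _ in range(num_rows):
        if rng.random() < 0.5:  # bounce right; otherwise bounce left
            slot += 1
    return slot

rng = random.Random(2)  # fixed seed (arbitrary) for a repeatable run
landings = [drop_ball(12, rng) for _ in range(5)]
print(landings)
```

With 12 rows there are 13 possible slots, and slot 6 is the one directly below the drop point.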

If you only drop one ball, the result is unpredictable. It might land somewhere near the middle, or it might veer off to one side. But if you drop hundreds or thousands of balls, a clear pattern begins to appear. Most of the balls end up in the central slots, with fewer and fewer in the ones further out. The shape that forms is almost identical to the bell curve.

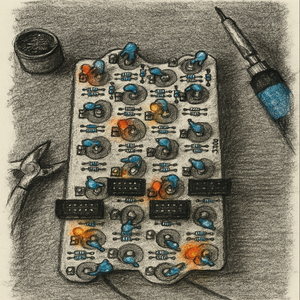

What’s happening is exactly what we saw with the coins. Each ball is making a series of random heads-or-tails (or in this case left–right) choices. The final position depends on how many times it bounced to the right, just like the number of heads in a series of coin tosses. The balls are following a binomial distribution. And as with the coins, the more rows you have and the more balls you drop, the more clearly the bell-shaped pattern appears. Here we see the counts from the simulation vs the predicted values:
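If you'd like to produce a comparison of this kind yourself, the following is a minimal sketch. It is not the simulation behind the figures in this article; the board size `NUM_ROWS`, the ball count `NUM_BALLS`, and the seed are assumptions chosen for illustration. The predicted count for each slot is the number of balls multiplied by the binomial probability of that many rightward bounces.

```python
import random
from collections import Counter
from math import comb

NUM_ROWS = 12      # assumed number of peg rows
NUM_BALLS = 5_000  # assumed number of balls dropped

rng = random.Random(3)  # fixed seed (arbitrary) for a repeatable run

# Each ball's slot is its number of rightward bounces (idealised 50/50 pegs).
counts = Counter(
    sum(rng.random() < 0.5 for _ in range(NUM_ROWS))
    for _ in range(NUM_BALLS)
)

# Predicted counts from the binomial distribution with p = 0.5.
predicted = [NUM_BALLS * comb(NUM_ROWS, slot) / 2**NUM_ROWS
             for slot in range(NUM_ROWS + 1)]

print("slot  simulated  predicted")
for slot in range(NUM_ROWS + 1):
    print(f"{slot:4d}  {counts[slot]:9d}  {predicted[slot]:9.1f}")
```

The two columns will not match exactly, since the simulated counts are random, but they should track each other closely, and more closely still as the number of balls increases.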

So the Galton board gives us a physical way to watch the binomial distribution at work — and to see how, with enough repetitions, the bell curve naturally appears.

The source code for the Galton board simulation is available on GitHub.